AI – It’s your move

Last year’s World Chess Championship in Dubai saw reigning champion Magnus Carlsen and his challenger Ian Nepomniachtchi draw one game in what has been billed as the most accurate game of championship chess in history. That is, according to the best available computer analysis, neither player made a move that appreciably lost ground or conceded any advantage. An article on the event noted that the analysis suggests chess at the top level has been getting progressively more accurate, especially since advanced computer analysis became widely available to players (tinyurl.com/2p8x9mb4).

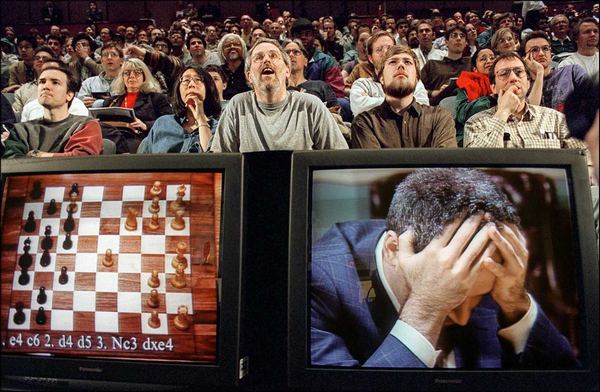

This is an instructive example of computers and humans interacting to achieve ever greater accuracy and effectiveness. The 1997 match in which the then World Champion Garry Kasparov was defeated by a computer has been seen as a landmark in the development of machine thinking, and as the point at which computers became better than humans (even if, at the time, the computer needed human adjustments to its programming between games to achieve the feat). In 2016, a computer programme called AlphaGo defeated one of the world’s best players at the ancient board game of Go. Go is considered harder for machines to play, since it relies far more than chess on intuitive judgement, but AlphaGo was trained to play it through machine learning. Again, the expectation is that the best Go players will now improve by using computers to analyse their games for flaws and plan their strategies.

It is now commonplace in chess circles to talk about ‘computer moves’ that appear utterly unintelligible to the human mind, but which the computer shows to make sense some 14 moves later. The computer can now see lines that the human mind would not even begin to consider (because they often violate the general principles of play in the immediate position).

Researchers are now trying to train computers to play more like humans. They have trained a neural network to identify players from their moves and playing style alone, which could eventually allow computers to offer more bespoke assistance. ‘Chess engines play almost an “alien style” that isn’t very instructive for those seeking to learn or improve their skills. They’d do better to tailor their advice to individual players. But first, they’d need to capture a player’s unique form’ (tinyurl.com/263ej48a). Or, as the paper’s abstract puts it: ‘The advent of machine learning models that surpass human decision-making ability in complex domains has initiated a movement towards building AI systems that interact with humans’ (tinyurl.com/2p9a7fwk). There are considerable commercial applications for such a capability, not to mention the police and security implications of stylometric analysis extending beyond the chessboard.

In sport, too, technology appears to have improved umpiring decisions, particularly on cricket’s ever-controversial leg-before-wicket rule. The introduction of ball-tracking technology (alongside Hotspot and ‘Snicko’) has not only improved the accuracy of final decisions but has also influenced the umpires’ decision-making in the first place. Deliveries they might previously have judged not out with the naked eye have been shown empirically to be legal dismissals. Umpires have adapted accordingly, to reduce the chance of being overruled by the machine.

Perhaps the most extreme manifestation of this human-computer interaction was announced in December last year: human brain cells in a Petri dish were trained to play a computer game (tinyurl.com/2p9efekb). That they learnt faster than AI software is intriguing in itself, and points to problems that have dogged the development of AI for a long time. Although computers have promised general, adaptive intelligence since they were first conceived, decades of research have yet to produce tangible results beyond tightly restricted, rule-based domains such as chess or Go.

The dream of driverless cars, for example, has taken a considerable knock. In practice the cars tend not to respond well to the chaotic environment of a real road (which has led to a number of fatalities during the testing of these machines). The fact that their sensors can be fooled by something resembling the white lines of a road (or, even worse, deliberately spoofed into treating something as a road by malicious actors) has seriously dented the idea that driverless vehicles will be rolled out generally on our roads any time soon.

And this leaves aside even more dangerous possibilities, such as hackers seizing control of automated driving systems and making the car do whatever they want.

This is all the more alarming as military applications of AI are increasingly being deployed in the real world (and form part of the current military competition between the US and China). Last year, the British government announced that its forces had deployed AI in combat manoeuvres:

‘Through the development of significant automation and smart analytics, the engine is able to rapidly cut through masses of complex data. Providing efficient information regarding the environment and terrain, it enables the Army to plan its appropriate activity and outputs […] In future, the UK armed forces will increasingly use AI to predict adversaries’ behaviour, perform reconnaissance and relay real-time intelligence from the battlefield’ (tinyurl.com/5nrbxv7t).

Of course, some of this is puffery to promote the armed forces, but the AI competition between powers is real. Nor is it confined to the development labs. In the recent war between Azerbaijan and Armenia, the Azerbaijanis used AI-assisted drones to considerable effect:

‘Relatively small Azerbaijani mobile groups of crack infantry with light armor and some Israeli-modernized tanks were supported by Turkish Bayraktar TB2 attack drones, Israeli-produced loitering munitions, and long-range artillery and missiles, […] Their targeting information was supplied by Israeli- and Turkish-made drones, which also provided the Azerbaijani military command with a real-time, accurate picture of the constantly changing battlefield situation’ (tinyurl.com/4cwb37au).

Wikipedia defines a loitering munition as: ‘a weapon system category in which the munition loiters around the target area for some time, searches for targets, and attacks once a target is located’ (tinyurl.com/bdhmmvp7).

The applications are still limited, but given that drone swarm displays have become as common and spectacular as fireworks, it is clear that the technology exists to inflict serious damage on a wide scale with these devices, and some of the finest minds in the world are working to make them more lethal and more autonomous, so that they can keep operating if their communication links are disrupted.

Tellingly, in parliamentary answers, the British government refuses to back a moratorium on autonomous killing devices: ‘the UK will continue to play an active role […], working with the international community to agree norms and positive obligations to ensure the safe and responsible use of autonomy’ (tinyurl.com/2p8vbkr5).

The fact is that no-one quite knows where this will end. Futurologists talk of an event called ‘the Singularity’, a point in time beyond which we cannot make meaningful predictions and after which the world would be completely unrecognisable. The likeliest cause of an imminent singularity, they claim, is the invention of superhuman intelligence capable of redesigning and improving itself. This, they argue, would in turn lead to innovations arriving so fast that they would be obsolete by the time they were implemented.

At present, this remains theoretical, and decades of research into artificial intelligence and machine learning have still not provided even a theoretical route to a machine capable of general intelligence, as opposed to a specific, task-focused capability.

‘The common shortcoming across all AI algorithms is the need for predefined representations […]. Once we discover a problem and can represent it in a computable way, we can create AI algorithms that can solve it, often more efficiently than ourselves. It is, however, the undiscovered and unrepresentable problems that continue to elude us’ (tinyurl.com/26ve3zky).

Computers can work very effectively at what they do, but they lack intention or volition. At www.chess.com/computer-chess-championship, chess engines play each other tirelessly and sterilely, endlessly making moves with no love for the game and no pleasure in victory.

We can expect the impact of AI on our lives to be a co-operative effort between humans and machines. This article, for instance, was written with the assistance of Google’s search algorithms, which brought up relevant articles at a moment’s notice and replaced hours of research in a physical library.

P.S.